I might be dating myself, but when I was a kid, I remember my parents talking about New Math. I don’t believe I was ever exposed to New Math; it might not have made it to the small towns in northwestern Ohio! The New Math Wiki was actually very interesting, taking us back to the Cold War with the Soviet Union and the push to improve American scientific and mathematical skills. For better or worse, New Math was not able to change the status quo and was abandoned.

Not really the point of this blog, but New Math stressed the mathematical concept of set theory. This is a concept that one of my mentors spoke about quite often, and something I suggest everyone do a little reading on, especially if you are not familiar with it.

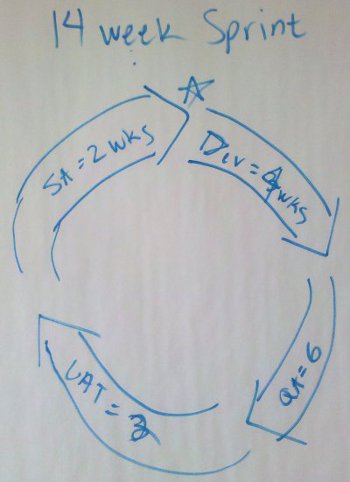

So, why New Agile? A friend happened to snap a picture of a whiteboard at his place of employment, illustrating their new 14-week Agile process. Oh, where to begin… I’m going to skip the obvious 14-week concept and tie this back to one of my previous posts on testing, where I asked the question: Should testing be the limiting factor in the software development process? I am not exactly sure what numbers were used per cycle, as they seemed to be a discussion point, but the whiteboard does highlight an interesting trend.

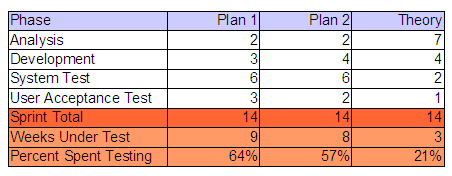

I threw together this little table to illustrate my point. Based on either combination of values, the project was going to allocate between 57% and 64% of its schedule to testing. Using the worst-case numbers, we are talking about testing the code taking 3 times longer than actually developing it. Is it just me, or is that crazy? What if you had two (2) months’ worth of development? Simple math: that would require six (6) additional months to validate! I realize several factors can contribute to this equation, but I’m pretty sure we are not talking about a team of twelve (12) developers to one (1) tester, which would create an unbalanced capacity situation. Just for fun, I threw a Theory column into my table. I can’t remember where I learned or read this, but I’m sure everyone knows this fact as well… the cost of fixing bugs grows the later in the process they are found. We should be spending the vast majority of our software development resources on upfront planning, which includes requirements, analysis, and design. It would seem that if developers knew exactly what they were building and testers knew exactly what they were testing, both teams could do a much higher-quality job, probably in less time!

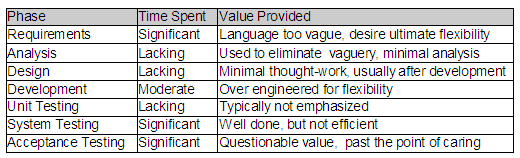

Unfortunately, we seem to be spending too much of our time in the wrong SDLC phase and on the wrong artifacts. I can’t tell you how many times I have seen a hundred (100) page requirements document for a seemingly trivial system. The SDLC mandates that these documents be produced, regardless of the content’s quality. This is no fault of the business; they simply try to document their needs, given what they know. Because requirements are typically not allowed to evolve and are seldom interpreted correctly by the IT team, the business never gets what it really needs. Additionally, the time the testing teams spend providing traceability back to the excessively verbose requirements, while generating and documenting their test cases, could almost be considered SDLC overhead. The testers are rarely given a chance to actually automate their test cases, which, in my opinion, are the most valuable artifact of their job.

If we could produce smaller, more concise, valuable requirements documents and spend more time doing actual analysis and design work, we could actually take steps toward my Theory column. This approach could, and should, enable the later phases of the SDLC to be completed with less effort, fewer issues, and in less time. So, two questions:

- Is this not common sense?

- Why is this approach not embraced by technology organizations? The benefits seem unquestionable…

I was reading the  I actually found two versions of the document by the author, Steve Pieczko. The

Earlier this month, Kent tweeted about the “Pragmatic Magazine”. I had been to the “
